
Synchronization is a mechanism that lets processes use shared memory resources safely in an operating system. Today most computers are multitasking machines that can run more than one process at the same time, and networking has become one of the most developed modern technologies. Computers and networks work together to achieve common goals, and in doing so they have to share resources such as memory. Sharing memory can cause many problems, and synchronization mechanisms were introduced to avoid them.

- Concurrency

As mentioned above, operating systems share memory in order to achieve common goals. Data can be shared between two processes in one operating system or between two operating systems over a network. However, there are times when the shared data can be accessed by only one process at a time. A shared bathroom is a real-life example: many people can use it, but only one person at a time. If someone is using the bathroom, the others have to wait until that person comes out, and then one of the waiting people can use it. Another real-life example is a shared street or junction, where traffic can flow in only one direction at a time. While one direction is using the street, traffic from the other direction has to wait until it is clear; otherwise there will be collisions and many other problems. In the same way, when processes use shared memory, sometimes only one process may access the data at a time. Handling this kind of competing access to a shared resource is the problem of concurrency.

There must be some mechanism to control this concurrency. In the two real-life examples above, the shared bathroom can have a lock on its door to make sure that only one person uses it at a time, and the shared street can have traffic lights to make sure that only one direction uses it at a time and collisions are avoided. In the same way, locking and synchronization are the mechanisms used to control concurrency in operating systems.

- Properties of systems with concurrency

Systems with concurrency have three properties: multiple actors, shared resources, and rules for accessing the resources. In the two real-life examples above, the multiple actors are people and vehicles, while the shared resources are the bathroom and the street. The rules for accessing these shared resources are one person at a time for the bathroom and one direction at a time for the shared street. In an operating system, the multiple actors are the processes within the operating system or the threads within processes. Shared memory, global variables and shared devices are examples of shared resources, while locks and semaphores provide the rules for accessing the shared resources. These three properties help to explain what concurrency is.

- Situations with no risk of concurrency

In current computer technology, there are some situations in which more than one process or thread runs at the same time but there is no risk of concurrency problems. Those situations are as follows:

- No shared memory or communication :- Here, the processes or threads do not have shared memory or communication, so each process or thread works only with its own private memory.

- Shared memory with read-only access :- Here, the shared memory can be accessed by one or more processes or threads at the same time, but it is available to them for reading only. The shared memory cannot be modified in this situation, so no conflict can arise.

- Copy-on-Write (COW) :- Here, the shared memory is accessed by one or more processes or threads at the same time, and a process or thread receives its own separate copy of the shared memory as soon as it tries to write to it. Each process therefore modifies only its own copy, and there is no risk of concurrency problems.

- Situations with a risk of concurrency

Alongside the situations with no risk, there are some situations in current operating systems that do carry a risk of concurrency problems. Those situations are as follows:

- Use of shared memory without any synchronization :- Here, if the shared memory is accessed by more than one process or thread at the same time without any synchronization (and without a separate copy for each process or thread), there is a risk of concurrency problems.

- Any modification to the shared memory :- If the shared memory is accessed by more than one process or thread, and at least one of them makes changes to the shared memory, then there is a risk of concurrency problems.

If either of these situations occurs, there is a risk of concurrency problems in the system, and such problems can cause many errors. A situation in which the result depends on the timing of the competing accesses is known as a race condition.

- Race condition

This section discusses the race condition using the example of a bank account. In this example, there are two people and one bank account. The code of this example executes as follows:

    account.balance = £200;

    int withdraw (account, amount = £50) {     // person1
        balance = account.balance;             // person1
        balance -= amount;                     // person1
    int deposit (account, amount = £100) {     // person2
        balance = account.balance;             // person2
        balance += amount;                     // person2
        account.balance = balance;             // person1
        return balance;                        // person1
    }                                          // person1
        account.balance = balance;             // person2
        return balance;                        // person2
    }                                          // person2

For this example, the two people are named person1 and person2. The comment at the end of each line shows which person executes it, so the listing shows the two calls interleaved in time rather than one function nested inside the other. This is a sketch of the program and is not written in any particular programming language. The very first line sets the balance of the account to £200. The remaining lines, counted from the call to withdraw() and ignoring the closing braces, are explained as follows:

Line 1 :- person1 starts executing the code and calls withdraw(), passing the account and an amount of £50 as the parameters.

Line 2 :- person1 reads the balance from the account and assigns its value to the local balance variable in his withdraw().

Line 3 :- person1 modifies the local balance variable in his withdraw() by subtracting the amount, so its value becomes £150.

Line 4 :- person2 starts executing the code by calling deposit(), passing the account and an amount of £100 as the parameters.

Line 5 :- person2 reads the balance from the account and assigns it to the local balance variable in his deposit(). The balance in the account is still £200, because person1 has not yet written his update back to the account.

Line 6 :- person2 modifies the local balance variable by adding the amount to it, so the value of the balance variable in deposit() becomes £300.

Line 7 :- person1 updates the balance in the account to £150.

Line 8 :- person1 returns the value of the balance variable in withdraw().

Line 9 :- person2 updates the balance in the account to £300, overwriting the value written by person1.

Line 10 :- person2 returns the value of the balance variable in his deposit().

After this code has executed, both people have completed their transactions, leaving the final balance in the account as £300, while the correct balance is £250 (£200 - £50 + £100). There is a clear error in the final output of the process, and this is called a race condition.
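The interleaving described above can be replayed deterministically. The following is a minimal Python sketch, not the original program: the `Account` class and the `run_interleaved` function are names invented for illustration, and no real threads are used, so the steps always run in the order given in the walkthrough.

```python
from dataclasses import dataclass


@dataclass
class Account:
    balance: int


def run_interleaved(account):
    """Replay the ten steps in the same order, without real threads."""
    # Lines 1-3: person1 begins withdraw(account, 50).
    p1_balance = account.balance   # person1 reads 200
    p1_balance -= 50               # person1's local copy is now 150

    # Lines 4-6: person2 begins deposit(account, 100) before person1 writes back.
    p2_balance = account.balance   # person2 also reads 200
    p2_balance += 100              # person2's local copy is now 300

    # Lines 7-8: person1 writes back and returns.
    account.balance = p1_balance
    # Lines 9-10: person2 writes back and returns, overwriting person1's update.
    account.balance = p2_balance
    return p1_balance, p2_balance


account = Account(balance=200)
withdraw_result, deposit_result = run_interleaved(account)
print(account.balance)   # 300, although 200 - 50 + 100 should give 250
```

The withdrawal is lost because person2 read the balance before person1 wrote it back.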

- Managing concurrency

Managing synchronization means using synchronization mechanisms to write rules that control concurrent situations. Two such rules are described below:

- Atomicity

Atomicity allows only one thread at a time to access and manipulate a piece of data. In other words, no other thread is allowed to change the data while one thread is working on it. This is done either by locking the record while a thread is manipulating it or by making the other threads wait until that thread has finished with the record.

- Conditional synchronization

Under this rule, threads access the record in a particular order. When a thread arrives to access the record, the rule checks the order, adds the thread to a queue and makes it wait until it is that thread's turn to access the record.
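One way to sketch conditional synchronization is with a condition variable. The Python example below is an illustration under assumptions, not the method the text prescribes: the `turn` counter, the `order` list and the `worker` function are invented names, and the ordering rule is simply "thread 0, then thread 1, then thread 2".

```python
import threading

turn = 0                       # number of the thread allowed to proceed next
order = []                     # records the order in which threads accessed the record
cond = threading.Condition()


def worker(my_turn):
    global turn
    with cond:
        # Sleep until the condition "it is my turn" becomes true.
        cond.wait_for(lambda: turn == my_turn)
        order.append(my_turn)  # access the shared record
        turn += 1
        cond.notify_all()      # let the waiting threads re-check the condition


# Start the threads in reverse so that arrival order differs from turn order.
threads = [threading.Thread(target=worker, args=(i,)) for i in reversed(range(3))]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(order)   # [0, 1, 2]
```

Whatever order the threads arrive in, each one waits until its own turn comes up.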

Both access rules are easy to implement once the critical part of the process, the part that only one thread may execute at a time, has been identified. For example, while person A is accessing the account balance, person B is not allowed to access the balance at the same time. The withdraw operation can be examined in more detail as follows.

    1. int withdraw (account, amount) {
    2.     int balance = account.balance;
    3.     balance -= amount;
    4.     account.balance = balance;
    5.     return balance;
    6. }

Line 1: starts the withdraw operation in the thread and passes in the external parameter values, "account" and "amount" in this case.

Line 2: declares a local variable "balance" and loads into it the stored account balance, "account.balance", from the account table in the database, so that the thread can process it.

Line 3: changes the value of the "balance" variable by subtracting the "amount" passed in as an external parameter (a subtraction, because this is a withdrawal). The "-=" notation performs the subtraction.

Line 4: updates the database with the new balance after the withdrawal amount has been deducted from the original balance. The local variable "balance", which holds the new balance, is copied to "account.balance" and the record is updated.

Line 5: returns the new "balance" value, for example to display it on screen if necessary.

Line 6: ends the withdraw operation with "}".

There is no conflict when two threads execute lines 1, 5 and 6 at the same time. But if two threads execute lines 2, 3 and 4 at the same time, one of them will be working with incorrect information. Lines 2 to 4 are therefore critical, and only one thread may be allowed to execute them at any given time, as shown below.

    int withdraw (account, amount) {
    // -------------------------------------------
        int balance = account.balance;
        balance -= amount;              // Critical Section
        account.balance = balance;
    // -------------------------------------------
        return balance;
    }

The identified section is called a critical section. In other words, no other thread is allowed to enter it while one thread is using it, because the data is manipulated in lines 2, 3 and 4.

- Critical section

A critical section is a piece of code that accesses shared resources, and there are several ways of protecting a critical section, such as:

Locks, semaphores, monitors and messages. This report describes how locks and semaphores behave and how priority works.

- Locks in synchronization

The first method of synchronization is locks. Locking is one of the most primitive mechanisms in use. A lock protects the critical section while a thread is processing the record inside it. A lock has two states, Held and Not Held.

In the Held state, one thread is in the critical section. In the Not Held state, no thread is in the critical section, and a waiting thread can be given access according to its priority.

Locks also have two operations, Acquire and Release. A thread calls Acquire to request the lock before entering the critical section. If the lock is already held, the thread waits; once the thread that was using the critical section releases the lock, the waiting thread gets its chance and the state changes to Held again. When a thread finishes its work in the critical section, it must release the lock so that it can be used by the other threads waiting in the queue.

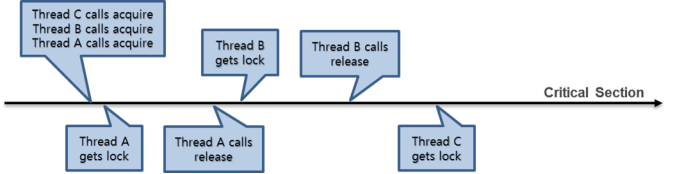

Figure

The figure above shows how Acquire and Release behave when the critical section is accessed. For example, threads A, B and C request access to the critical section at the same time through the Acquire operation, and thread A is granted access, with the lock state set to Held. Once thread A completes its work, it calls the Release operation to change the lock state to Not Held. The lock then changes back to Held for thread B, and so on until every thread's request for the critical section has been served.

Using the previous example, the listing below shows where a thread uses the Acquire and Release operations.

    int withdraw (account, amount) {
        acquire (lock);                 // Request the lock in order to enter the critical section
        int balance = account.balance;
        balance -= amount;              // Critical Section
        account.balance = balance;
        release (lock);                 // Release the lock after leaving the critical section
        return balance;
    }

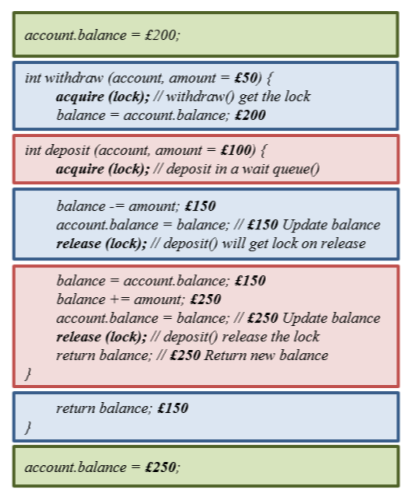

Figure 2 applies this to the earlier withdraw and deposit situation and shows how the lock overcomes the problem that occurred when both people accessed the same account balance to change the amount.

Figure
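The withdraw and deposit situation can also be sketched with a real lock. The Python example below is a minimal illustration that assumes the standard `threading.Lock`; the `Account` class and the thread names are invented for the example.

```python
import threading


class Account:
    def __init__(self, balance):
        self.balance = balance
        self.lock = threading.Lock()   # starts in the Not Held state


def withdraw(account, amount):
    with account.lock:                 # acquire(lock)
        balance = account.balance      # critical section starts
        balance -= amount
        account.balance = balance      # critical section ends
    # Leaving the "with" block performs release(lock).
    return balance


def deposit(account, amount):
    with account.lock:
        balance = account.balance
        balance += amount
        account.balance = balance
    return balance


account = Account(200)
person1 = threading.Thread(target=withdraw, args=(account, 50))
person2 = threading.Thread(target=deposit, args=(account, 100))
person1.start()
person2.start()
person1.join()
person2.join()
print(account.balance)   # 250
```

Because each read-modify-write sequence runs while the lock is held, the two updates cannot overlap, whichever thread happens to run first.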

A lock can be implemented with a Boolean variable that records whether the lock is held (TRUE) or not (FALSE). The Acquire operation keeps other threads waiting while the held value is TRUE. If the held value is FALSE, Acquire changes it to TRUE on behalf of the requesting thread. When the thread has completed its work in the critical section, it calls the Release operation, which changes the held value back to FALSE and makes the lock available to other threads, as shown in the constructs below.

First, create a global Boolean variable that holds TRUE or FALSE to record whether the lock is held by a thread. By default the lock is not held by any thread, so the initial value is FALSE.

    struct lock {
        bool held;   // initial value FALSE
    }

The acquire construct requests the lock. If lock->held is TRUE, the requesting thread waits for the lock to be released. If lock->held is FALSE, acquire changes lock->held to TRUE and the thread takes control of the critical section.

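    void acquire (lock) {
        // The test and the set below must execute as one atomic step
        // (for example with a hardware test-and-set instruction);
        // otherwise two threads could both see FALSE and both take the lock.
        while (lock->held)
            ;                  // Keep other threads waiting while the lock is held
        lock->held = TRUE;     // Once the lock is free, take it by changing the value to TRUE
    }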

The release construct simply changes the state of lock->held to FALSE, which allows another thread to enter the critical section next.

    void release (lock) {
        lock->held = FALSE;    // When work in the critical section is complete, change the lock value to FALSE
    }
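The acquire and release constructs can be approximated in Python. This is only a sketch under a stated assumption: Python offers no hardware test-and-set instruction, so the non-blocking call `threading.Lock.acquire(blocking=False)` stands in for the atomic "test the flag and set it" step. The `SpinLock` class and the `add_many` function are names invented for the example.

```python
import threading
import time


class SpinLock:
    def __init__(self):
        # Stand-in for the "held" flag: locked means TRUE, unlocked means FALSE.
        self._flag = threading.Lock()

    def acquire(self):
        # acquire(blocking=False) tests the flag and sets it in one atomic step,
        # returning False if another thread already holds it.
        while not self._flag.acquire(blocking=False):
            time.sleep(0)   # keep waiting, but give other threads a chance to run

    def release(self):
        self._flag.release()   # held = FALSE


counter = 0
lock = SpinLock()


def add_many(n):
    global counter
    for _ in range(n):
        lock.acquire()
        value = counter        # critical section: read, modify, write
        counter = value + 1
        lock.release()


threads = [threading.Thread(target=add_many, args=(500,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)   # 2000
```

A waiting thread keeps retrying instead of sleeping, which mirrors the empty while loop in acquire. In practice `threading.Lock` would be used directly.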

- Semaphores in synchronization

This is the second method of synchronization. A semaphore is a basic but powerful mechanism, although it is more difficult to implement than a lock. Instead of making threads wait on a lock while the critical section is in use, it blocks the threads that request access (puts them to sleep) until access becomes available.

Semaphores have two operations:

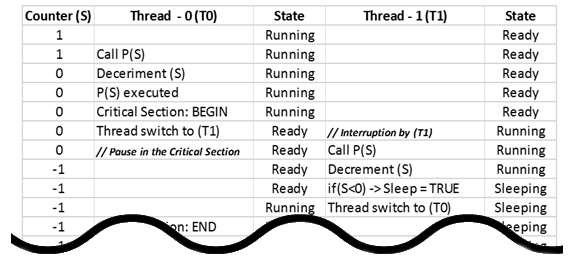

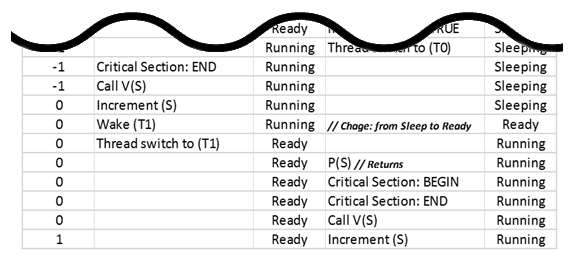

- wait (semaphore) or P(): As soon as a thread requests access, P() or wait (semaphore) decreases the counter, whose value is "1" by default, and then checks whether the result is below "0". If the counter goes from "1" to "0", the thread has obtained the one available opportunity and enters the critical section. If a second thread now tries to enter, it decreases the counter to "-1", so it has to wait and its state changes to sleeping. If the counter is still "1" after the decrease, the semaphore started with two opportunities: the current thread has used one of them and one is left for another thread, as explained in Figure ………………….

- signal (semaphore) or V(): Once a thread completes its work in the critical section, it calls V() or signal (semaphore), which increases the counter by "1". For example, suppose the first thread's P() decreased the counter to "0" when it entered the critical section, and a second thread then decreased it to "-1" while requesting access and went to sleep. When the first thread calls V(), the counter returns to "0" and the sleeping thread is woken up to start its work in the critical section, as explained in Figure ………………………………….

Figure ………………..

Figure

Figure
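The counter behaviour of wait() and signal() described above can be sketched in Python. This is an illustrative implementation under assumptions, not a definitive one: the `CountingSemaphore` class, its `counter` and `_wakeups` fields, and the `worker` function are invented names, and a `threading.Condition` is used to put threads to sleep and wake them. Python's standard library already provides `threading.Semaphore` for real use.

```python
import threading
import time


class CountingSemaphore:
    def __init__(self, value=1):
        self.counter = value       # a negative value counts the sleeping threads
        self._wakeups = 0          # wake-up permits handed out by signal()
        self._cond = threading.Condition()

    def wait(self):                # P()
        with self._cond:
            self.counter -= 1
            if self.counter < 0:   # no opportunity left: go to sleep
                while self._wakeups == 0:
                    self._cond.wait()
                self._wakeups -= 1

    def signal(self):              # V()
        with self._cond:
            self.counter += 1
            if self.counter <= 0:  # at least one thread is sleeping: wake one
                self._wakeups += 1
                self._cond.notify()


sem = CountingSemaphore(1)
inside = 0                         # threads currently in the critical section
max_inside = 0                     # highest value of "inside" ever observed


def worker():
    global inside, max_inside
    sem.wait()
    inside += 1
    max_inside = max(max_inside, inside)
    time.sleep(0.01)               # pretend to work in the critical section
    inside -= 1
    sem.signal()


threads = [threading.Thread(target=worker) for _ in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(max_inside, sem.counter)     # 1 1
```

With an initial value of 1, only one thread is ever inside the critical section, and the counter is back at 1 once all three threads have signalled.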

- Readers/Writers synchronization

This is another powerful method of synchronization. In this method, the data is accessed in two different ways, by readers and by writers. Readers only read the file, while writers update the file contents or data. There are four (4) rules in readers/writers synchronization, as follows:

- If one or more readers are reading the file and no writer is pending, the next reader can read the file without waiting.

- Writers have to wait until the current readers finish reading the file. After the readers finish reading, the writers can start writing.

- If a writer is writing to the file, arriving readers have to wait until the writer finishes writing.

- If one writer is writing to the file and both readers and writers are waiting, priority is given to the waiting writers. The readers therefore wait until all writers have completed writing and only then start reading, so that they read the most up-to-date file.
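The four rules can be sketched as a small writer-priority lock. The Python class below is one possible illustration, not a standard library facility: `WriterPriorityRWLock`, its method names and the demonstration functions are all invented for the example.

```python
import threading
import time


class WriterPriorityRWLock:
    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0              # readers currently reading
        self._writer_active = False    # True while a writer is writing
        self._writers_waiting = 0      # writers queued for access

    def acquire_read(self):
        with self._cond:
            # Rules 1, 3 and 4: wait for an active writer and for pending writers.
            while self._writer_active or self._writers_waiting > 0:
                self._cond.wait()
            self._readers += 1

    def release_read(self):
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()

    def acquire_write(self):
        with self._cond:
            self._writers_waiting += 1
            # Rule 2: wait until current readers (and any writer) have finished.
            while self._writer_active or self._readers > 0:
                self._cond.wait()
            self._writers_waiting -= 1
            self._writer_active = True

    def release_write(self):
        with self._cond:
            self._writer_active = False
            self._cond.notify_all()


rw = WriterPriorityRWLock()
log = []


def writer():
    rw.acquire_write()
    log.append("write")
    rw.release_write()


def reader():
    rw.acquire_read()
    log.append("read")
    rw.release_read()


rw.acquire_read()                      # a first reader is already reading
w = threading.Thread(target=writer)
w.start()
while rw._writers_waiting == 0:        # wait until the writer is queued
    time.sleep(0.001)
r = threading.Thread(target=reader)    # arrives after the writer
r.start()
rw.release_read()                      # first reader finishes
w.join()
r.join()
print(log)   # ['write', 'read']
```

The late reader is held back while a writer is pending, so it reads only after the update has been written.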

- Future trends of synchronization

At present, locks and semaphores are widely used to prevent concurrency problems. It is very important to handle the code in critical sections correctly, as otherwise the reliability of information systems suffers. However, systems that use locks are not time efficient, because threads spend time blocked while they wait. For this reason there is a current trend towards implementing lock-free systems.

In the earlier example, the shared street has to be controlled by traffic lights, so only one direction is allowed at a time and the other directions are blocked. There is, however, a technique that does not need any traffic lights on the shared street: a flyover lets all directions use the junction at once without blocking one another. This technique may still cost some time, because the flyover makes the route longer. Lock-free systems can be implemented in information systems in a similar way, typically by using atomic hardware operations instead of locks. As in the flyover example, an individual process may still sometimes be delayed, for instance when it has to retry an update that another process changed first. The stronger guarantee, known as wait-free, requires that every process finishes within a bounded number of steps. It is considerably harder to achieve, so in practice many systems provide lock-free behaviour without also being wait-free.